Fixie – Testing by Agreement

I want to give an overview of the framework and its capabilities, and work out whether it is worth a closer look.

As stated on the site, Fixie is Conventional Testing for .NET, i.e. testing by agreement. ‘Agreement’ here means something we are all used to: everything works on the basis of an "oral" agreement, a gentlemen's naming convention. The closest example is scaffolding. We agree, for example, that test classes contain the word Test, or that test methods must be public and return nothing. Classes and methods that follow the agreement are then recognized as tests. No more attributes or anything of that kind. Just classes and methods.

At first glance it all looks good and nice. It turns out that you only need to name the methods and classes correctly and you will be happy. At the same time, you can train a team to name test classes as required, rather than as an arbitrary combination of words somehow related to the topic of the test class.

Installation and First Test

By default, Fixie treats every class whose name ends with Tests as a test class. Test methods are all methods within these classes that do not return a value. I.e. in theory, and in practice, a plain class with no attributes at all is recognized as a set of tests.
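As a minimal sketch of what the default convention picks up, the class below uses no attributes and no Fixie types at all. The names CalculatorTests, ShouldAddTwoNumbers, and Add are hypothetical, and the hand-written check stands in for an assertion library.

```csharp
using System;

// Hypothetical example: the class name ends with "Tests",
// so the default convention would treat it as a test class.
public class CalculatorTests
{
    // Public and returns void: picked up as a test method.
    public void ShouldAddTwoNumbers()
    {
        int sum = Add(2, 3);

        // A test fails when it throws, so a plain exception is enough here.
        if (sum != 5)
            throw new Exception("Expected 5 but was " + sum);
    }

    // Returns a value, so under the default rule it is a helper, not a test.
    public int Add(int a, int b)
    {
        return a + b;
    }
}
```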

Before proceeding further, it is worth saying a few words about installation and the things required to get Fixie running.

The framework itself is installed from NuGet with the Install-Package Fixie command. But that is not everything. The framework does not provide built-in assertions for checking data in a test. For that, you need one of the third-party solutions:

- FluentAssertions

- Should

- Shouldly

- Something else on the same topic
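Because a test simply fails when it throws, the role an assertion library plays can be sketched with a tiny hand-rolled helper. Check.Equal below is hypothetical and is not part of Fixie or of any library listed above; in a real project one of those libraries would take its place.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for an assertion library.
public static class Check
{
    // Throws when the two values differ; a runner reports the exception as a failure.
    public static void Equal<T>(T expected, T actual)
    {
        if (!EqualityComparer<T>.Default.Equals(expected, actual))
            throw new InvalidOperationException(
                "Expected <" + expected + "> but was <" + actual + ">");
    }
}

public class StringTests
{
    public void ShouldTrimWhitespace()
    {
        Check.Equal("fixie", "  fixie ".Trim());
    }
}
```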

Integration

Speaking of running tests. The author honestly admits that he ran the tests from the console himself, so integration with Visual Studio was the last thing he built. There is a plugin for ReSharper, but it targets versions 8.1 to 8.3, i.e. it does not support the current version. I did not want to downgrade to version 8 just to run the tests, so I cannot say how comfortable it is. Integration with Visual Studio works at the level of test discovery and running, i.e. there is no highlighting in the editor: the special test icons are missing.

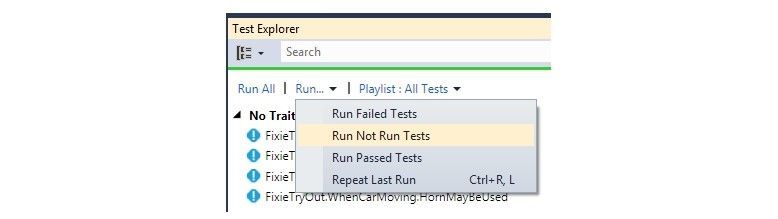

Here, in my opinion, lies a potential source of errors: typos. It is not hard to make a typo in the word "Tests", and the tests in that class will then be silently skipped. Visually, tests are not marked in any way. To be fair, this situation is rare and unlikely if you use the right Visual Studio tools and do not run all the tests en masse but, for example, only those that have not been run yet.

In general, given that the framework is new and relatively unknown, it lacks many tools. As for test discovery and result reporting, the documentation tells us that Fixie can generate reports in the NUnit and xUnit styles, which will make life much easier for those who have a properly set up CI.

Tuning

The main strength of the framework is the ability to flexibly adjust how your tests are discovered, run, validated, and so on. Apart from the default convention, nothing is provided with the framework out of the box. However, the repository contains many different examples. To make everything a bit more interesting, let's dig into how to set up the framework to fit your needs. For example, how to state which other classes should be identified as test classes.

All settings are made in the constructor of a class inherited from Convention.

The default settings are minimal.
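The rule behind the default convention can be sketched with plain reflection. This is not Fixie's Convention API and not how Fixie is implemented; ConventionSketch, FindTests, and the sample classes are hypothetical names used only to illustrate "class name ends with Tests, method returns void".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public class OrderTests
{
    public void ShouldBeEmptyWhenCreated() { }
    public int Helper() { return 42; }   // not a test: returns a value
}

public class OrderTsets                   // typo in "Tests": silently ignored
{
    public void ShouldNeverBeFound() { }
}

public static class ConventionSketch
{
    // Returns "Class.Method" for every method that matches the naming agreement.
    public static List<string> FindTests(IEnumerable<Type> candidates)
    {
        return candidates
            .Where(type => type.IsClass && type.Name.EndsWith("Tests", StringComparison.Ordinal))
            .SelectMany(type => type
                .GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
                .Where(method => method.ReturnType == typeof(void))
                .Select(method => type.Name + "." + method.Name))
            .ToList();
    }
}
```

Calling ConventionSketch.FindTests(new[] { typeof(OrderTests), typeof(OrderTsets) }) yields only OrderTests.ShouldBeEmptyWhenCreated: the helper is excluded by its return type and the misspelled class by its name.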

The default convention says that test classes must end with Tests and test methods are everything that returns void. Overall it is decent. I try to stick to the principle that a test class name begins with the word When and test names begin with the word Then. This creates a rather neat picture in the test runner, and when writing tests you already know what you are testing and only have to think about the expected effects. An added bonus is that test classes are short and responsibilities do not mix between tests. Naturally, any rule only indicates a direction and is not dogma. “A Foolish Consistency is the Hobgoblin of Little Minds”, so you always need to use common sense.

Looking at my list of tests, I can see that not all classes end with the word Tests or start with the word When.

There is no strict naming structure for the test methods either, such as every test beginning with the word Should. But in my opinion, when reading the tests you understand well what is happening there. I speak from experience. This project has been going for 3-4 years already, with varying success, and each time I come back to it I quickly recall what has been done and what needs to be done.

With this naming style I am a little scared (and lazy) to list the keywords by which test classes will be identified. In addition, the When ... Then ... approach in practice means that there is a setup method that runs at the beginning of each test, and each test then either checks the result or acts on the created object in some way. I.e. you will have to explicitly mark or specify the setup method (SetUp).

One way to implement this is to call the setup method explicitly in each test.
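A minimal sketch of the explicit approach, following the When/Then naming described earlier and assuming a hypothetical shopping-cart scenario; the class, field, and test names are illustrative only.

```csharp
using System;
using System.Collections.Generic;

public class WhenCartIsCreated
{
    private List<string> cart;

    // Called by hand at the start of every test.
    private void SetUpEnvironment()
    {
        cart = new List<string>();
    }

    public void ThenItIsEmpty()
    {
        SetUpEnvironment();
        if (cart.Count != 0)
            throw new Exception("Cart should be empty");
    }

    public void ThenItAcceptsAnItem()
    {
        SetUpEnvironment();   // forgetting this line ties the test to whatever ran before
        cart.Add("book");
        if (cart.Count != 1)
            throw new Exception("Cart should contain one item");
    }
}
```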

Of course, in real life the setup method needs a more informative name than SetUpEnvironment(), but the idea is clear. With this implementation the class fields retain their values between tests, which can easily lead to dependent tests if I forget to write the initialization line in one of them.

Fixie offers a solution for this situation: you need to implement a descendant of CaseBehavior and put everything you need there. The documentation gives the following correspondence:

- CaseBehavior = [SetUp] / [TearDown]

- FixtureExecution = [FixtureSetUp] / [FixtureTearDown]
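The idea behind a case behavior, code that wraps every test from outside the test class, can be sketched without Fixie. ICaseWrapper, LoggingWrapper, and MiniRunner below are hypothetical stand-ins rather than Fixie types, and Fixie's real CaseBehavior contract may differ in its signature.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a case behavior: it receives the test as a delegate.
public interface ICaseWrapper
{
    void Execute(string caseName, Action next);
}

public class LoggingWrapper : ICaseWrapper
{
    public readonly List<string> Log = new List<string>();

    public void Execute(string caseName, Action next)
    {
        Log.Add("SetUp " + caseName);          // plays the role of [SetUp]
        try
        {
            next();                            // the test itself
        }
        finally
        {
            Log.Add("TearDown " + caseName);   // plays the role of [TearDown]
        }
    }
}

public static class MiniRunner
{
    // Runs each named test through the wrapper; the tests know nothing about it.
    public static void Run(ICaseWrapper wrapper, IDictionary<string, Action> cases)
    {
        foreach (var pair in cases)
            wrapper.Execute(pair.Key, pair.Value);
    }
}
```

The test delegate itself contains no hint that setup and teardown run around it; that knowledge lives entirely in the wrapper.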

It is not hard to demonstrate this. Let DefaultConvention be as in the example above. Then, when I run a test class, nothing in the class itself tells me that there is a SetUp/TearDown.

MAGIC!!! Magic is generally interesting, but not in this case. I would not want such magic in my project. It completely contradicts the idea that tests should be transparent. Even if I write an extension for CaseBehavior in the same class, it will not solve the problem, as it will still not be obvious where to look for the class in which everything is set up. Constant copy-paste is not the answer either.

Compare the Fixie version with the same test written for NUnit: there are fewer lines of code in the case of NUnit, and the SetUp inside the class will not get lost in the project.

Maybe I'm biased and write tests incorrectly, but ... I'm not sure about that. I have not seen many articles stating that SetUp is evil. Of course, anything can be taken to the point of absurdity, and you can initialize half of the project there, but that is a different story. However, there is a solution for this case too. Custom attributes can be used to identify the relevant test parts, tests, and classes. I.e. you can fully emulate NUnit or xUnit in your project.

With attributes, you can create

- support for categories

- parameterized tests

- tests carried out in strict sequence

- anything that comes to mind
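Category support, for instance, comes down to a self-made attribute plus a filter over the discovered methods. The sketch below uses plain reflection instead of Fixie's API; CategoryAttribute, PaymentTests, and CategoryFilter are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical self-made attribute; it is defined by the test project itself.
[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public class CategoryAttribute : Attribute
{
    public string Name { get; private set; }

    public CategoryAttribute(string name)
    {
        Name = name;
    }
}

public class PaymentTests
{
    [Category("CategoryA")]
    public void ShouldChargeCard() { }

    [Category("CategoryB")]
    public void ShouldRefundCard() { }

    public void ShouldLogAttempt() { }
}

public static class CategoryFilter
{
    // Names of the public void methods on testClass tagged with the given category.
    public static List<string> MethodsIn(Type testClass, string category)
    {
        return testClass
            .GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
            .Where(method => method.ReturnType == typeof(void))
            .Where(method => method
                .GetCustomAttributes(typeof(CategoryAttribute), false)
                .Cast<CategoryAttribute>()
                .Any(attribute => attribute.Name == category))
            .Select(method => method.Name)
            .ToList();
    }
}
```

CategoryFilter.MethodsIn(typeof(PaymentTests), "CategoryA") returns only ShouldChargeCard. In a Fixie project this kind of filter would presumably live inside a convention rather than in a separate helper.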

And running a single category from the console:

Fixie.Console.exe path/to/your/test/project.dll --include CategoryA

My Verdict

I could say much more about Fixie and give examples of solving various problems, but the first impression is already formed, so it is time to wrap up.

It turns out that Fixie is a metaframework for testing. You are free to build all sorts of rules and capabilities for testing, and they are built rather simply, to be honest. The only question is whether it is worthwhile. Is it necessary to do all this? The lack of R# support, and the fact that I cannot see whether a test really is a test and whether the framework recognizes it, is a bit discouraging. I would not use it in production, but for home use and as a promising tool Fixie is very interesting. At the very least, I will definitely remember it if an interesting and specific testing task comes up that is difficult to solve by standard NUnit means.

Software Architecture and Development Consultant